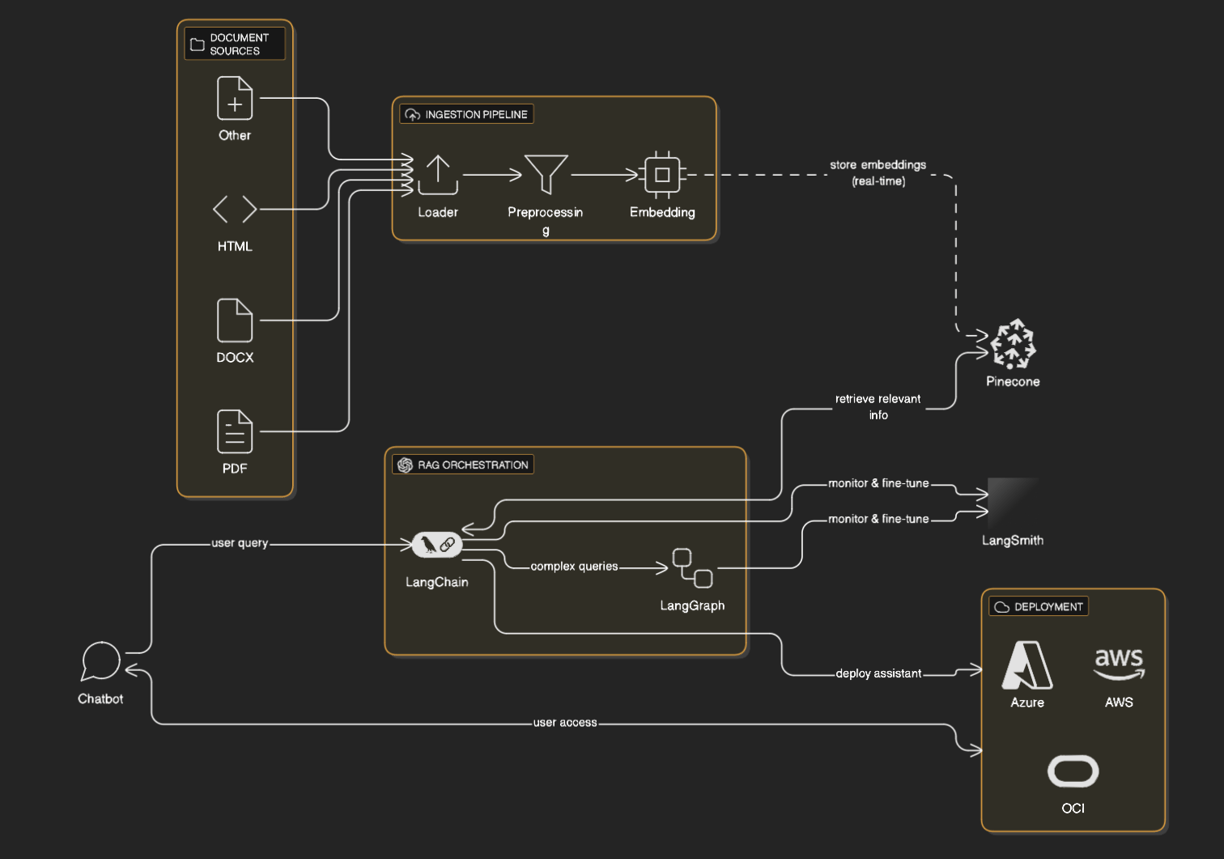

How to Build a Knowledge Base AI Agent Using LangChain, LangGraph, LangSmith, Azure, AWS, and OCI

GENERATIVE AI

Subbu D

3/1/2025 · 2 min read

Artificial Intelligence agents that can understand, recall, and reason over enterprise data are at the heart of digital transformation. In this post, we’ll walk through building a Knowledge Base AI Agent using the open-source LangChain and LangGraph frameworks and the LangSmith observability platform, integrated with cloud services from Azure, AWS, and OCI (Oracle Cloud Infrastructure).

Whether you're developing an AI assistant for internal documentation, customer support, or compliance management, this guide outlines a modular architecture that you can extend and harden for production use.

🔧 Tools & Technologies

| Component | Tool/Service |
|---|---|
| Framework | LangChain |
| Workflow Engine | LangGraph |
| Observability | LangSmith |
| Embedding/LLM Services | Azure OpenAI, Amazon Bedrock, OCI AI Services |
| Vector Store / Search | Pinecone, ChromaDB, FAISS, Azure AI Search (formerly Azure Cognitive Search), Amazon Kendra, or OCI Search with OpenSearch |
| Storage | Azure Blob Storage, Amazon S3, or OCI Object Storage |

🧠 Step 1: Define the Use Case

Let’s say you're building an AI assistant that answers questions based on your company’s internal documentation stored across different formats (PDFs, DOCX, HTML, etc.).

Your objectives:

- Ingest unstructured documents
- Embed and store knowledge in a vector database
- Use LangChain to orchestrate retrieval-augmented generation (RAG)
- Build multi-step reasoning with LangGraph
- Monitor, debug, and evaluate the pipeline with LangSmith
- Deploy securely on Azure, AWS, or OCI

📥 Step 2: Ingest and Chunk Documents

Use LangChain’s document loaders to ingest your data. This example loads PDFs; LangChain also provides loaders for other formats such as DOCX and HTML.

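```python
# Requires: pip install langchain-community langchain-text-splitters pypdf
from langchain_community.document_loaders import DirectoryLoader, PyPDFLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load every PDF under data/ (recursively); PyPDFLoader yields one Document per page
loader = DirectoryLoader("data/", glob="**/*.pdf", loader_cls=PyPDFLoader)
documents = loader.load()

# Split pages into ~1,000-character chunks that share 200 characters with their neighbors
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = splitter.split_documents(documents)
```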
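To make `chunk_size` and `chunk_overlap` concrete, here is a simplified, stdlib-only sketch of fixed-size character chunking with overlap. It is not LangChain's `RecursiveCharacterTextSplitter`, which additionally tries to split on paragraph, line, and word boundaries before falling back to raw characters; the function name `chunk_text` is hypothetical.

```python
def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Simplified illustration only: each window starts chunk_size - chunk_overlap
    characters after the previous one, so consecutive chunks share
    chunk_overlap characters.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


sample = "abcdefghij" * 250  # 2,500 characters
pieces = chunk_text(sample)
print(len(pieces), [len(p) for p in pieces])  # 3 [1000, 1000, 900]
```

The overlap gives each chunk some of its neighbor's context, so text that happens to be cut at a boundary is less likely to lose its meaning at retrieval time.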

🧬 Step 3: Generate Embeddings

Option A: Azure OpenAI

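```python
# Requires: pip install langchain-openai
from langchain_openai import AzureOpenAIEmbeddings

embeddings = AzureOpenAIEmbeddings(
    azure_deployment="your-embedding-deployment",  # name of your embedding model deployment
    api_key="your-key",  # better: load from a secret store (see the Security Tip in Step 8)
    azure_endpoint="https://<your-resource>.openai.azure.com/",
    openai_api_version="2023-05-15",
)
```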

Option B: AWS Bedrock (Titan Embeddings)

```python
# Requires: pip install langchain-aws (uses your configured AWS credentials and region)
from langchain_aws import BedrockEmbeddings

embeddings = BedrockEmbeddings(model_id="amazon.titan-embed-text-v1")
```

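Option C: OCI Generative AI Embeddings

LangChain's community package includes an `OCIGenAIEmbeddings` class for the OCI Generative AI service. If you need custom behavior, wrap the OCI Generative AI SDK in your own class that implements LangChain's `Embeddings` interface.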
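Whichever provider you choose, LangChain's embedding classes share one small interface: `embed_documents` for a batch of chunks and `embed_query` for a single question. Wrapping a service such as OCI Generative AI yourself means implementing those two methods. The stdlib-only toy below shows that contract; the class name `HashingEmbeddings` and its word-hashing scheme are hypothetical stand-ins for a real model, and a production wrapper would subclass `langchain_core.embeddings.Embeddings` and call the provider's SDK inside these methods.

```python
import math
import zlib


class HashingEmbeddings:
    """Toy embedder exposing the same two methods as LangChain's Embeddings
    interface. Hypothetical: a real OCI wrapper would call the OCI
    Generative AI service here instead of hashing words.
    """

    def __init__(self, dim: int = 64):
        self.dim = dim

    def _embed(self, text: str) -> list[float]:
        vec = [0.0] * self.dim
        for word in text.lower().split():
            # crc32 is stable across runs (Python's built-in hash() is randomized)
            bucket = zlib.crc32(word.encode("utf-8")) % self.dim
            vec[bucket] += 1.0
        # L2-normalize so a dot product equals cosine similarity
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec] if norm else vec

    def embed_documents(self, texts: list[str]) -> list[list[float]]:
        return [self._embed(t) for t in texts]

    def embed_query(self, text: str) -> list[float]:
        return self._embed(text)


emb = HashingEmbeddings()
doc_vectors = emb.embed_documents(["refund policy for orders", "office opening hours"])
query_vector = emb.embed_query("refund policy")
print(len(doc_vectors), len(query_vector))  # 2 64
```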

🧠 Step 4: Store Embeddings in a Vector Store

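Option A: Azure AI Search (formerly Azure Cognitive Search)

LangChain's community package provides an `AzureSearch` vector store for it.

```python
# Requires: pip install langchain-community azure-search-documents azure-identity
from langchain_community.vectorstores.azuresearch import AzureSearch

vectorstore = AzureSearch(
    azure_search_endpoint="https://<your-service>.search.windows.net",
    azure_search_key="your-key",
    index_name="docs-index",
    embedding_function=embeddings.embed_query,
)

# Index the chunks from Step 2: each chunk is embedded and uploaded to the index
vectorstore.add_documents(docs)
```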

Option B: Amazon Kendra / OpenSearch

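On AWS, Amazon Kendra can act as a managed retriever through LangChain's `AmazonKendraRetriever`, or you can store vectors in Amazon OpenSearch Service with `OpenSearchVectorSearch`. For local development, LangChain's FAISS and Chroma integrations are lighter-weight alternatives.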
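Every vector store option above answers the same question at query time: which stored chunk vectors are closest to the query vector? The stdlib-only sketch below does this by brute force with cosine similarity. Production stores such as FAISS or Azure AI Search use optimized or approximate indexes instead; the helper names and the 3-dimensional toy vectors are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_similar(query_vec: list[float], doc_vecs: list[list[float]], k: int = 2):
    """Return (index, score) pairs for the k most similar vectors, best first."""
    scored = [(i, cosine(query_vec, v)) for i, v in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings" for three chunks
chunks = ["refund policy", "shipping times", "refund exceptions"]
vectors = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9], [0.8, 0.3, 0.1]]
query = [1.0, 0.2, 0.0]

for idx, score in top_k_similar(query, vectors, k=2):
    print(chunks[idx], round(score, 3))
```

This is the lookup a retriever created with `as_retriever()` performs for you before the LLM sees any text.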

🤖 Step 5: Create a RAG Chain with LangChain

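```python
# Requires: pip install "langchain<1.0" langchain-openai  (RetrievalQA is a legacy chain)
from langchain.chains import RetrievalQA
from langchain_openai import AzureChatOpenAI

# Endpoint and key are read from AZURE_OPENAI_ENDPOINT / AZURE_OPENAI_API_KEY
llm = AzureChatOpenAI(
    azure_deployment="gpt-4",  # your chat model deployment name
    api_version="2024-02-01",  # an API version your resource supports
    temperature=0,
)

qa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",  # put the retrieved chunks directly into the prompt
    retriever=vectorstore.as_retriever(search_kwargs={"k": 4}),
)

answer = qa_chain.invoke({"query": "What is our refund policy?"})["result"]
```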
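`RetrievalQA` with its default `"stuff"` chain type retrieves the top-matching chunks and inserts them into a single prompt for the LLM. The plain-Python sketch below mirrors that retrieve, stuff, generate flow. `retrieve` and `fake_llm` are hypothetical stand-ins for the vector-store retriever and the Azure chat model, and the prompt wording is an assumption, not LangChain's built-in template.

```python
from typing import Callable


def build_prompt(question: str, contexts: list[str]) -> str:
    """Stuff the retrieved chunks into one prompt (wording is illustrative)."""
    context_block = "\n\n".join(contexts)
    return (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context_block}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question: str,
           retrieve: Callable[[str], list[str]],
           llm: Callable[[str], str]) -> str:
    """Retrieve, stuff into a prompt, then generate."""
    contexts = retrieve(question)
    return llm(build_prompt(question, contexts))


# Hypothetical stand-ins for the retriever and the chat model
def retrieve(question: str) -> list[str]:
    return ["Refunds are issued within 30 days of purchase."]


def fake_llm(prompt: str) -> str:
    # A real model would reason over the prompt; this stub echoes the first context line
    return prompt.split("Context:\n")[1].split("\n")[0]


print(answer("What is our refund policy?", retrieve, fake_llm))
```

Because every retrieved chunk goes into one prompt, the "stuff" strategy is simple but bounded by the model's context window, which is one reason to keep `k` small.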

🔄 Step 6: Build Reasoning Workflows with LangGraph

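LangGraph models a workflow as a graph: nodes (Python functions or LangChain runnables) read and update a shared state object, and edges, which can be conditional, decide which node runs next.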

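```python
from typing import TypedDict

from langgraph.graph import END, START, StateGraph


# Shared state passed between nodes
class QAState(TypedDict):
    question: str
    answer: str


def answer_question(state: QAState) -> dict:
    # Run the RetrievalQA chain from Step 5 (it expects a "query" key)
    result = qa_chain.invoke({"query": state["question"]})
    return {"answer": result["result"]}


builder = StateGraph(QAState)  # StateGraph requires a state schema
builder.add_node("answer", answer_question)
builder.add_edge(START, "answer")  # entry point
builder.add_edge("answer", END)    # use the END constant, not the string "end"
graph = builder.compile()

result = graph.invoke({"question": "What is our refund policy?"})
print(result["answer"])
```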

For more complex flows (e.g., classification → summarization → answer generation), add one node per step and use `add_conditional_edges` to branch on values in the state.
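The classification → summarization → answer flow can be sketched in plain Python to show the two core ideas: nodes return partial updates that are merged into shared state, and a routing function picks the next node. This is a hypothetical, framework-free illustration; in LangGraph itself you would register these functions with `add_node` and pass the router to `add_conditional_edges`. The node names and the keyword-based classifier are assumptions.

```python
from typing import Callable, Optional, TypedDict


class State(TypedDict, total=False):
    question: str
    category: str
    summary: str
    answer: str


# Each node reads the shared state and returns a partial update
def classify(state: State) -> dict:
    # Hypothetical keyword classifier; a real node would call an LLM
    q = state["question"].lower()
    return {"category": "policy" if "policy" in q else "general"}


def summarize(state: State) -> dict:
    return {"summary": f"Policy question about: {state['question']}"}


def generate_answer(state: State) -> dict:
    basis = state.get("summary", state["question"])
    return {"answer": f"[answer based on] {basis}"}


def route_after_classify(state: State) -> str:
    # Conditional edge: policy questions are summarized first
    return "summarize" if state["category"] == "policy" else "generate_answer"


NODES: dict[str, Callable[[State], dict]] = {
    "classify": classify,
    "summarize": summarize,
    "generate_answer": generate_answer,
}
EDGES: dict[str, Optional[str]] = {"summarize": "generate_answer", "generate_answer": None}


def run(state: State, entry: str = "classify") -> State:
    node: Optional[str] = entry
    while node is not None:
        state = {**state, **NODES[node](state)}  # merge the node's update into state
        node = route_after_classify(state) if node == "classify" else EDGES[node]
    return state


print(run({"question": "What is our refund policy?"})["answer"])
```

LangGraph's `StateGraph` runs this merge-and-route loop for you and adds features such as checkpointing and streaming.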

📊 Step 7: Monitor and Debug with LangSmith

LangSmith captures traces, errors, and performance metrics.

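```python
import os

# Enable LangSmith tracing for LangChain/LangGraph runs in this process
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "your-langsmith-key"
os.environ["LANGCHAIN_PROJECT"] = "kb-agent"  # optional: group runs under a project name
```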

Run your pipeline and inspect the traces in the LangSmith UI. You can tag runs, evaluate outputs against test datasets, and step through each node of a LangGraph run.

☁️ Step 8: Deployment via Azure / AWS / OCI

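Wrap the agent in a FastAPI app so it can be deployed as a web service:

```python
from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()


class QueryRequest(BaseModel):
    question: str


@app.post("/query")
def query(request: QueryRequest) -> dict:
    # Accept a JSON body like {"question": "..."} and run the RAG chain from Step 5
    result = qa_chain.invoke({"query": request.question})
    return {"response": result["result"]}
```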

Hosting Options:

| Cloud | Hosting Option |
|---|---|
| Azure | Azure App Service / Azure Kubernetes Service |
| AWS | ECS / Lambda / SageMaker Endpoint |
| OCI | OCI Functions / OCI Data Science model deployment |

Security Tip:

Use managed identity or secret vaults (Azure Key Vault, AWS Secrets Manager, OCI Vault) for API keys and credentials.
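One common pattern, assumed here, is to let the platform inject vault-held secrets as environment variables (for example, Key Vault references in Azure App Service or the `secrets` field of an ECS task definition) and have the app fail fast at startup if one is missing. A stdlib sketch; `require_secret` and `MissingSecretError` are hypothetical names.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected into the environment."""


def require_secret(name: str) -> str:
    """Read a secret injected as an environment variable instead of hard-coding it.

    Assumes the hosting platform populates the variable from a vault
    (Azure Key Vault, AWS Secrets Manager, or OCI Vault).
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"Required secret {name!r} is not set")
    return value


# Usage: abort startup cleanly if the platform did not provide the key
try:
    api_key = require_secret("AZURE_OPENAI_API_KEY")
except MissingSecretError as err:
    print(f"Startup aborted: {err}")
```

Failing at startup surfaces a misconfigured deployment immediately instead of on the first user request.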

📌 Bonus: Real-time Collaboration with LangGraph Agents

Use LangGraph’s agent support to build agents that can:

- Ask clarifying questions
- Search internal systems
- Escalate to a human when confidence is low
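Escalation can be expressed as a routing rule: respond automatically when a confidence score clears a threshold, otherwise hand off to a person. In this stdlib sketch, how the score is produced (retrieval similarity, an LLM self-assessment, and so on) and the 0.75 threshold are assumptions; in LangGraph, a function like `route` could serve as the router passed to a conditional edge.

```python
from dataclasses import dataclass


@dataclass
class DraftAnswer:
    text: str
    confidence: float  # assumed to be in [0, 1]; how it is computed is up to you


def route(draft: DraftAnswer, threshold: float = 0.75) -> str:
    """Return the next step: 'respond' or 'escalate_to_human'."""
    if not 0.0 <= draft.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "respond" if draft.confidence >= threshold else "escalate_to_human"


print(route(DraftAnswer("Refunds take 30 days.", 0.92)))  # respond
print(route(DraftAnswer("Possibly 30 days?", 0.40)))      # escalate_to_human
```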

✅ Conclusion

By combining LangChain’s orchestration, LangGraph’s flow control, LangSmith’s observability, and the managed services of Azure, AWS, or OCI, you can build scalable Knowledge Base AI agents tailored to real-world enterprise needs.